Chapter 13

Self-awareness (part 1)

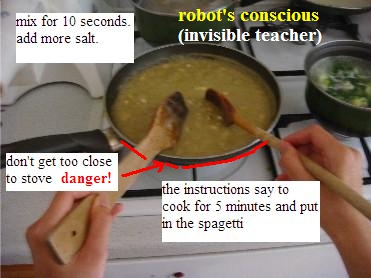

Self-awareness means that a human or robot can control its actions, both mental and physical, in an intelligent manner (with college-level intelligence). The primary function of the robot is to pursue actions that lead to pleasure and to stay away from actions that lead to pain. Thus, the robot is self-aware because he is able to manage tasks. This includes: doing a task, doing multiple tasks, doing simultaneous tasks, handling interruptions of tasks, aborting tasks, modifying tasks, scheduling tasks, following rules while doing tasks, etc.

Basically, the robot is alive and self-aware as a result of the robot's consciousness. The robot's consciousness manages tasks. In addition, it can do other things, like observe the environment for danger or unusual events, alert the robot to hazards in the environment, filter sensed data from the environment, generate facts about objects, provide common sense knowledge, solve problems, extract facts from memory, etc. Referring to FIG 60, all tasks are structured hierarchically. These tasks range from driving a car to playing video games to making a sandwich. Similar experiences are stored closer to each other. For example, driving a car is similar to driving a motorcycle, so the two are hierarchically structured and stored close to each other.

Also, data in the robot's brain are stored and gravitate close to each other based on association. In FIG 60, the pathway for playing a racing video game is stored close to the pathway for driving a real motorcycle because of their similarities. Data like cat and dog gravitate towards each other in memory because of association.

When all the task pathways self-organize, based on hierarchical similarities and association, universal decision making pathways are created, which form the top of the hierarchy (the robot's brain comprises massive forests and trees). These decision making pathways control and manage tasks for the robot. In other words, the robot is self-aware because of these decision making pathways.

The most important thing is that the decision making pathways are created by lessons learned in school and through personal experience. All decision making pathways are learned from either teachers in school or information in books. As stated numerous times in previous books, my robot's brain does not use machine learning, neural networks, NLP, decision trees, genetic programming, semantic networks, predicate calculus, or any other modern-day AI technique. However, it does use deep learning to break up data in movie sequences (called pathways) so that the data can be stored in an optimal manner. Only 10 percent of the robot's data structure is devoted to deep learning. Despite what people say, I, in part, discovered deep learning back in 2006 (some of the material in this book was discovered even earlier than that, via college papers).

Let's take a look at what kinds of pathways are stored in the decision making pathways. If you look at research papers and books from MIT and Stanford University, they focus mainly on recursive tasks. In my invention, teachers teach the robot how to do recursive tasks. Also, storing linear steps in pathways is one way to represent recursive tasks. If the robot is making a sandwich and is doing step1, step2, step3, etc., he is automatically doing recursive tasks. More complex types of recursive tasks are learned from teachers. For example, the teacher can teach the robot to manage complex recursive tasks by writing things down. If the robot has to do a task that has 200 recursive sub-tasks, he can write them down on a piece of paper. He can mark off completed tasks and see which tasks to do next, or which tasks to abort, modify, etc. For simple recursive tasks, the robot can use his brain to remind himself what tasks to do.
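The written checklist described above can be pictured with a small sketch. This is only an illustration under stated assumptions, not the robot's actual pathway format: the `Checklist` class, its method names, and the sandwich steps are all hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class Checklist:
    """A written list of sub-tasks, each marked 'todo', 'done', or 'aborted'."""
    status: dict[str, str] = field(default_factory=dict)

    def add(self, name: str) -> None:
        self.status[name] = "todo"

    def mark_done(self, name: str) -> None:
        self.status[name] = "done"

    def abort(self, name: str) -> None:
        self.status[name] = "aborted"

    def modify(self, old: str, new: str) -> None:
        # Rewrite a sub-task in place, keeping its position on the paper.
        self.status = {(new if k == old else k): v for k, v in self.status.items()}

    def next_task(self) -> str | None:
        # The first sub-task still to do, or None when nothing is left.
        return next((k for k, v in self.status.items() if v == "todo"), None)


# Hypothetical sub-tasks for making a sandwich.
sheet = Checklist()
for step in ["get bread", "add lettuce", "add tomato", "close sandwich"]:
    sheet.add(step)

sheet.mark_done("get bread")
sheet.abort("add tomato")
sheet.modify("add lettuce", "add spinach")
print(sheet.next_task())  # add spinach
```

The same structure would work for 200 sub-tasks, since the robot only ever needs to ask the sheet what comes next.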

Referring to FIG 60, pathway20 is doing simultaneous tasks. Teachers teach the robot to do task1, stop, do task2, stop, continue task1, stop, continue task2, stop, continue task1, etc. The robot has to repeat this until both task1 and task2 are completed.
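The stop-and-continue pattern above resembles round-robin interleaving. The sketch below is one possible way to picture it, assuming each task can be paused after every step; the function names `task` and `interleave` are illustrative and do not come from the invention.

```python
from __future__ import annotations
from collections import deque
from typing import Iterator


def task(name: str, steps: int) -> Iterator[str]:
    """A task that pauses after each step so another task can run."""
    for i in range(1, steps + 1):
        yield f"{name}: step {i}"


def interleave(*tasks: Iterator[str]) -> list[str]:
    """Do one step of each unfinished task in turn until all are completed."""
    queue = deque(tasks)
    log = []
    while queue:
        current = queue.popleft()
        try:
            log.append(next(current))
            queue.append(current)  # not finished: go to the back of the line
        except StopIteration:
            pass                   # finished: drop it from the rotation
    return log


log = interleave(task("task1", 3), task("task2", 2))
for line in log:
    print(line)
```

With a 3-step task1 and a 2-step task2, the steps alternate until task2 runs out, and task1 then finishes on its own.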

Referring to FIG 62, pathway36 is doing a task quickly or at a given speed. Teachers teach the robot how to speed up or slow down a task. Through repeated experience, the robot creates a universal pathway in memory that can do any given task quickly. Teachers also teach about the consequences of speeding up a task. For example, if the sandwich is made too quickly, the robot might make mistakes like dropping the lettuce or adding too much tomato sauce. Only through personal experience, and by learning to speed up a wide variety of tasks, can the robot truly refine his skills.

Referring to FIG 64, pathway42 is a very important pathway because the robot is trying to combine knowledge from 2 or more tasks (pathways). Scientists from MIT and Stanford University have been trying to teach their robots to learn information in a bootstrapping manner. If the robot learns simple math like addition, how can he use that knowledge to solve a problem? In another example, if the robot learns what a binary tree is, how can he use that knowledge to write a customer database system?

Humans learn math in a bootstrapping manner, whereby each piece of knowledge builds on the previous one to form complex intelligence. First we learn algebra, then we use that knowledge to learn trigonometry. Next, we use trigonometry to learn calculus. Then, we use calculus to learn discrete math or computer science.

Referring to FIG 64, pathway42 takes 2 tasks and combines them. A simple example would be: task1 is to manage bullets in Call of Duty, and task2 is to do addition. Because the first task is to manage bullets, the robot has to use bullets as the variable when doing the addition problem. Let's say the robot (the player) picks up 2 gun clips from a house. He has to keep track of the number of bullets he has in his gun, so he must do simple math, mainly addition. The robot currently has 40 bullets in his gun and each gun clip has 15 bullets. Since he has 2 gun clips, the equation looks like this: 40 + 15 + 15 = 70. Thus, the robot now has 70 bullets in his gun.
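As a minimal illustration of plugging a game variable into a learned arithmetic skill, the calculation can be written as a function. The function and parameter names are hypothetical; only the numbers come from the example.

```python
def bullets_after_pickup(current: int, clips: int, bullets_per_clip: int) -> int:
    """Add the bullets from the picked-up clips to the current count."""
    return current + clips * bullets_per_clip


total = bullets_after_pickup(current=40, clips=2, bullets_per_clip=15)
print(total)  # 70
```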

In this case, bullets is a variable that is plugged into the addition problem. This is how the robot takes knowledge like addition and applies it to a given problem. Pathway42 showcases a more complex example. Task1 is to write a customer database system and task2 is to use knowledge of binary trees. Now, the robot has to take the knowledge learned about binary trees, which are a data structure with associated search algorithms, and apply it to build a customer database system. The robot has to identify variables and functions from the customer database system and insert them into a binary tree. For example, the nodes in the binary tree will represent customer information, and the search operation will go left for names beginning with A-M and right for names beginning with N-Z.
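To make the customer database example concrete, here is a hedged sketch of a standard binary search tree keyed by customer name. It is a textbook structure, not code from the invention; `CustomerNode`, `insert`, `search`, and the sample names are assumptions. With a root name near the middle of the alphabet, names in roughly A-M fall to the left and names in roughly N-Z fall to the right.

```python
from __future__ import annotations
from dataclasses import dataclass
from typing import Optional


@dataclass
class CustomerNode:
    name: str   # search key (assumed here to be the customer's last name)
    info: dict  # the customer record stored at this node
    left: Optional[CustomerNode] = None
    right: Optional[CustomerNode] = None


def insert(root: Optional[CustomerNode], name: str, info: dict) -> CustomerNode:
    """Insert a customer, going left for smaller names and right for larger ones."""
    if root is None:
        return CustomerNode(name, info)
    if name.lower() < root.name.lower():
        root.left = insert(root.left, name, info)
    elif name.lower() > root.name.lower():
        root.right = insert(root.right, name, info)
    else:
        root.info = info  # same name: update the existing record
    return root


def search(root: Optional[CustomerNode], name: str) -> Optional[dict]:
    """Return the record for a name, or None if the customer is not stored."""
    node = root
    while node is not None:
        if name.lower() == node.name.lower():
            return node.info
        node = node.left if name.lower() < node.name.lower() else node.right
    return None


root = None
for last_name in ["Miller", "Chen", "Nguyen", "Adams", "Zhou"]:
    root = insert(root, last_name, {"name": last_name})

print(search(root, "Zhou"))   # {'name': 'Zhou'}
print(search(root, "Smith"))  # None
```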

Thus, learning information through a bootstrapping process is itself a learned skill. It is learned from teachers in school. This is important because some AI scientists think genetic programming or decision trees are the key to this type of self-learning. They aren't.

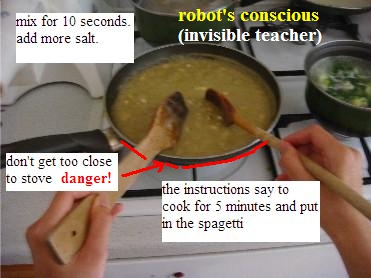

In conclusion, the human brain isn't special. All intelligent thinking in a human brain comes from knowledge learned in school, and there is no divine angel giving us information. The voice in a human's mind is like an invisible teacher that gives information, makes decisions, alerts the host to danger, observes, generates common sense knowledge, predicts the future, schedules tasks, etc. This invisible teacher, which exists in the human mind, was created from a lifetime's worth of learning from school, personal experience, and books.

My goal for this book is to explain how the human brain works and how to build a digital brain with human-level intelligence. In my opinion, I have succeeded, and I'm 100 percent sure my invention, filed back in 2006, is the correct AI software. The content on this page explains in general how my invention works and what it does. The actual software is very long and complicated. Refer to my books for detailed information and examples.

Self-awareness (part 2)

As stated above, decision making pathways manage tasks for the robot. However, there are many other things these decision making pathways can do, such as:

1. learning a task (learning a new skill like driving a car or cooking a lobster dish)

2. practicing a task

3. using one or more tasks

4. modifying a task

1. For the first one, the decision making pathways can learn a new task (or skill), such as playing poker or making a sandwich. It can also learn complex or abstract knowledge like binary trees or linked lists.

Teachers in school teach the robot "how to teach itself" to learn a new skill. If the robot knows nothing about soccer, he has to seek out information in books or PE lectures to learn the objectives and rules of soccer. He was taught by teachers to identify goals and procedures so that he will know what to do at any given time. Then, he has to identify the rules of the game so that regulations are followed while playing soccer. This information can be obtained by reading books or observing demonstrations, like a teacher's lecture.

Referring to FIG. 32, after being taught 3 different sports games by a teacher, the robot develops a universal pathway to learn a game, called pathway65. Pathway65 was created because the robot was taught how to play soccer, football, and basketball. In all 3 sports, the instructions for learning the game are the same or similar. Because these instructions were so similar, the 3 pathways (J1, J2, and J3) self-organized and created pathway65 as a result.
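One simplified way to picture how three similar pathways could yield a universal one is to keep only the instructions they share. This sketch assumes steps are plain strings matched exactly, which is much cruder than self-organization based on similarity; the step texts and the `universal_pathway` function are hypothetical.

```python
from __future__ import annotations


def universal_pathway(pathways: list[list[str]]) -> list[str]:
    """Keep the steps shared by every pathway, in the order of the first one."""
    shared = set(pathways[0]).intersection(*pathways[1:])
    return [step for step in pathways[0] if step in shared]


# Hypothetical learning instructions for each sport.
J1 = ["identify goals", "identify procedures", "identify rules", "learn offside rule"]  # soccer
J2 = ["identify goals", "identify procedures", "identify rules", "learn downs"]         # football
J3 = ["identify goals", "identify procedures", "identify rules", "learn dribbling"]     # basketball

pathway65 = universal_pathway([J1, J2, J3])
print(pathway65)  # ['identify goals', 'identify procedures', 'identify rules']
```

The sport-specific steps drop out, leaving a general recipe that could be applied to a game the robot has never seen.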

Now, pathway65 is known as the learning a game pathway and is considered a decision making pathway. For any new game that the robot doesn't know, his brain will use pathway65 to learn it. The same applies to other new skills. For example, if the robot doesn't know how to cook a lobster dish, his brain will extract pathway65 to learn this new skill.

2. Understanding the goals and rules of a game is one thing, but actually playing the game is something completely different. So, practicing a skill is very important because it allows the robot to refine the skill and become an expert in it. FIG 21A is a diagram depicting a learn a task pathway and FIG. 21B is a diagram depicting a practice pathway (which is also a decision making pathway). The learn a task pathway identifies and stores the knowledge of a task, and the practice pathway identifies and stores the linear instructions in pathways.

In this example, FIG. 21B, the robot is cooking a lobster dish. He is following and trying to remember the steps to accomplish the task. In some cases, the pathway has a for-loop at the end that repeats until a condition is met. Here, the condition is that all the steps of cooking a lobster dish have been committed to memory. After several tries, the robot becomes an expert in the task, and the cook lobster dish pathway contains the exact steps and rules to make a lobster dish. The more the robot practices, the better he becomes.
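The loop-until-memorized behavior can be sketched as follows. The memory model is a toy assumption (a fixed number of new steps is retained on each try), and the step names and the `practice` function are hypothetical.

```python
def practice(steps, learn_per_try=2):
    """Repeat the task until every step is committed to memory.

    Toy assumption: each try commits `learn_per_try` more steps to memory.
    `learn_per_try` must be at least 1, or the loop would never end.
    """
    memorized = []
    tries = 0
    while len(memorized) < len(steps):  # the exit condition of the loop
        tries += 1
        memorized = steps[: len(memorized) + learn_per_try]
    return tries, memorized


# Hypothetical steps for the lobster dish.
lobster_steps = ["boil water", "cook lobster", "melt butter", "season", "plate"]
tries, memorized = practice(lobster_steps)
print(tries)  # 3
```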

3. Referring to FIG. 21A, the important thing is that task pathways are different from decision making pathways. For example, the decision making pathway to learn a skill (pathway65) is different from the making lobster dish pathway (C5). Pathway65 is there to make the robot learn a skill. The end result is pathway C5, which contains all the knowledge needed to make a lobster dish, including goals, rules, linear steps, safety rules, basic cooking knowledge, etc.

Now that pathway C5 is created in memory, another decision making pathway is used to "utilize" the C5 task.

Referring to FIG 21C, pathway20 is a decision making pathway to do 2 tasks simultaneously. Pathway20 references data from pathway C5 to make a lobster dish. The robot is making a lobster dish and talking on the phone at the same time, trying to balance the 2 tasks.

4. Let's say that the robot learned how to play soccer in PE at school. 10 years later, that knowledge is forgotten and only vague facts are still stored in memory. In order to regain the full knowledge of soccer, the robot's brain has to use the learn pathway and the practice pathway again to remember and relearn the game.

In FIG 67, as you can clearly see, after using the learn pathway and the practice pathway, the soccer knowledge is restored to full capacity. The robot forgets information so as not to overload its memory bank. This ensures that only important things are stored in memory and minor things are forgotten. Also, forgetting allows the robot to live for millions or billions of years without needing additional hard drives for its memory bank.

Details on what is stored in the hierarchical trees (self-creating computer programs)

There are 2 things that are stored in pathways, structured in hierarchical trees:

1. computer functions and operations

2. time estimation for events and probability and possibility of events occurring.

1. Self-creating computer programs stored in pathways.

School teachers teach the robot "to teach itself how to learn and play" a game. In FIG. 45A, the sentences in pathway43 are telling the robot's brain to create 3 containers: one for goals, another for linear procedures, and a third for rules. The robot learns these goals, procedures, and rules and puts them in their respective containers.

"in all games there are goals, procedures, and rules. you have to identify all 3 in order to play a game. understand these goals and rules. Usually, during the game you have to do linear procedures and at the same time follow rules. your goal is to win the game."

These sentences configure data in an organized manner. In some ways, they generate a self-creating semantic network of data of various data types (based on the 5 human senses). Not only are the data semantically organized in an optimal way, but search functions to find information are also created. It's like a self-creating search engine.
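The three containers and a simple search over them can be sketched as below. This is only an illustration, assuming each fact has already been labeled as a goal, procedure, or rule; the `GameKnowledge` class and its methods are hypothetical names, and keyword matching stands in for whatever search the pathways actually create.

```python
from __future__ import annotations
from dataclasses import dataclass, field

CONTAINERS = ("goals", "procedures", "rules")


@dataclass
class GameKnowledge:
    goals: list[str] = field(default_factory=list)
    procedures: list[str] = field(default_factory=list)
    rules: list[str] = field(default_factory=list)

    def store(self, container: str, fact: str) -> None:
        """Put a fact into one of the three containers."""
        if container not in CONTAINERS:
            raise ValueError(f"unknown container: {container}")
        getattr(self, container).append(fact)

    def find(self, keyword: str) -> list[tuple[str, str]]:
        """Return (container, fact) pairs whose text mentions the keyword."""
        return [
            (container, fact)
            for container in CONTAINERS
            for fact in getattr(self, container)
            if keyword.lower() in fact.lower()
        ]


chess = GameKnowledge()
chess.store("goals", "eliminate the opponent's king")
chess.store("procedures", "take turns with the opponent")
chess.store("rules", "the king moves one step in any direction")

print(chess.find("king"))
```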

Furthermore, the sentences tell the robot what to do during the game (FIG. 45B). In this case, the instructions act as a self-creating operating system: do the procedures and, at the same time, follow the rules. The goal is to win the game.

All this is done through English sentences. For example, the sentences below:

"the goal of chess is to take turns playing the game until someone's king is eliminated. while playing the game you have to follow all the rules. here are the rules. remember them and follow each one."

tell the robot everything he needs to know about the game of chess. These sentences are spoken by a teacher, and the responsibility of the robot is to identify key facts. Once key facts are identified, the robot will use common sense to determine what to do in the game. For example, the procedure of the game is to take turns with an opponent and the goal is to eliminate the opponent's king. Thus, these instruction sentences given to the robot create objectives and procedures in his mind.

Thus, the next time the robot is playing chess, facts will start to pop up in his mind, such as:

1. the procedure of the game is to take turns with the opponent.

2. the goal of chess is to eliminate your opponent's king.

3. here are the rules (via visual images) for each chess piece. follow these rules when moving each chess piece. the horse piece (the knight) can only move in an L-shape, the king can only move one step in any direction, etc.

4. predict the future by imagining future steps for me and the opponent and come up with good moves.

5. make your moves quickly. this includes predicting future moves for me and the opponent and selecting the best move to win the game.

These sentences create a computer program inside the robot's mind to understand goals, procedures, and rules, to solve conflicts between rules, to search for data in memory regarding chess, etc. These sentences set up the semantic network to store and retrieve data, the linear steps to play chess, the goals and rules, and so forth.

The game of chess draws on many previously learned skills. For example, the sentence "the procedure of the game is to take turns with opponent" has a meaning. That sentence encapsulates a whole skill (another task pathway) that was previously learned by the robot. He might have learned a similar game, like checkers, and he is using that skill to take turns in chess.

The sentence "predict the future by imagining future steps for me and opponent and come up with good moves" creates a function to predict the future by imagining future moves by both the robot and the opponent and determining which move to make based on long-term benefits. This is 1 of 2 ways my robot's brain predicts the future. The 2nd way is the built-in future prediction function in the robot's brain.

In FIG. 56, the hierarchical tree is structuring the self-creating computer program in a hierarchical manner. At the top of the tree are general self-creating computer programs and at the bottom are specific self-creating computer programs. For example, E2 contains a general self-creating computer program to play any game. E5 contains a specific self-creating computer program to play the game of chess. The lower nodes (task pathways) inherit all data from higher level nodes of the tree. In other words, task pathways share data in a hierarchical tree.
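The inheritance described above, where lower nodes see everything stored in higher nodes, can be sketched with a parent link and a lookup that walks upward. The `PathwayNode` class and the stored keys are illustrative assumptions; only the node labels E2 and E5 come from the text.

```python
class PathwayNode:
    """A node in the hierarchical tree; lookups fall back to ancestor nodes."""

    def __init__(self, name, data=None, parent=None):
        self.name = name
        self.data = dict(data or {})
        self.parent = parent

    def lookup(self, key):
        node = self
        while node is not None:
            if key in node.data:
                return node.data[key]  # the most specific value wins
            node = node.parent         # otherwise inherit from the level above
        raise KeyError(key)


# E2: general program to play any game; E5: specific program to play chess.
E2 = PathwayNode("E2", {"structure": "goals, procedures, rules", "aim": "win the game"})
E5 = PathwayNode("E5", {"aim": "eliminate the opponent's king"}, parent=E2)

print(E5.lookup("structure"))  # goals, procedures, rules
print(E5.lookup("aim"))        # eliminate the opponent's king
```

The chess node stores only what is specific to chess and borrows the rest from the general game node, which is the sense in which task pathways share data.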

In the learn a task pathway and the practice pathway, the robot uses common sense, observation of the environment, and decision making to seek out important facts to store in memory (FIG. 17A and FIG. 17B). The robot might learn chess by reading a 30-page book. He has to use decision making pathways to identify only the most important facts to store in his memory. The learn a task pathway stores the knowledge, while the practice pathway stores the linear actions to play chess. Let's show an example of a practice pathway and how it learns to play chess. Below is a list of thoughts from the robot's mind that create and store the right instructions in task pathways for the game of chess:

question: what is the goal again?

recall: the goal of chess is to eliminate your opponent's king.

fact recall: all the rules and procedures pop up in the robot's mind

question: i'm starting the game, what do i do first?

answer: oh, i have to take turns with opponent to make moves.

fact: oh, i have to predict future moves for me and opponent and make a move quickly.

recall: the rules say i have to flip a coin to determine who starts first.

common sense: I win, this means i go first.

ok, the pawn can move like this. the horse can move like that, the bishop moves like this.

-pic1

logical thoughts: select important pieces to predict. look for my pieces that are in danger and look for pieces from opponent to kill. Randomly scan the pieces (strategy1).

these are the 4 moves i predicted. time's up, i've got to make a choice now.

logical thoughts: move1 is better than move2. move3 is better than move4 and move3 is better than move1. I select move3.

fact: it's opponent's turn. continue to observe and predict future moves (predict opponent move more than my move).
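The comparison step in the thoughts above can be sketched in code. This is only an illustration, not the robot's actual mechanism: the function name `select_best` and the encoding of each thought as a (winner, loser) pair are assumptions made for the sketch. Given the three comparisons listed, the only move that is never on the losing side is move3.

```python
def select_best(moves, better_than):
    """Return the one move that no other move is judged better than.

    `better_than` is a set of (winner, loser) pairs, a hypothetical
    encoding of thoughts such as "move1 is better than move2".
    """
    losers = {loser for _, loser in better_than}
    candidates = [m for m in moves if m not in losers]
    # With consistent comparisons, exactly one move is never beaten.
    if len(candidates) != 1:
        raise ValueError("comparisons do not single out one move")
    return candidates[0]


moves = ["move1", "move2", "move3", "move4"]
comparisons = {
    ("move1", "move2"),  # move1 is better than move2
    ("move3", "move4"),  # move3 is better than move4
    ("move3", "move1"),  # move3 is better than move1
}
best = select_best(moves, comparisons)
print(best)  # move3
```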

Notice that the thoughts of the robot are like a group of people in a room, asking questions, answering questions, providing logical facts, deciding on actions, predicting the future, generating common sense, etc.

Above are the linear thoughts of the robot's mind, which are stored in the pathways along with 5 sense data, hidden data, and pattern data. After using the practice pathway for several tries, the robot will have the required knowledge to play chess. If he practices for years, he will become an expert in the game. The practice pathway ensures the chess pathways contain optimal strategies and knowledge to play the game. The more the robot practices the more optimal strategies are generated and stored in the chess pathways. If the robot discovers a strategy that is better than an old strategy, the better strategy will be remembered and the old strategy will be forgotten.

robot's thoughts: i discovered a new strategy5. strategy5 is better than strategy3 or strategy1. So, in this situation use strategy5 next time.

robot's thoughts: remember strategy5 and forget strategy1 or strategy3 in situation7.
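The "remember the better strategy, forget the old one" behavior can be sketched as a small lookup table keyed by situation. The class name `StrategyMemory` and the numeric scores are hypothetical; the text does not say how the robot measures that one strategy is better than another.

```python
class StrategyMemory:
    """Keeps only the best strategy seen so far for each situation."""

    def __init__(self):
        self._best = {}  # situation -> (strategy name, score)

    def observe(self, situation, strategy, score):
        # A newly discovered strategy replaces the stored one only if it
        # scores higher; the weaker strategy is then "forgotten".
        current = self._best.get(situation)
        if current is None or score > current[1]:
            self._best[situation] = (strategy, score)

    def recall(self, situation):
        entry = self._best.get(situation)
        return entry[0] if entry else None


memory = StrategyMemory()
memory.observe("situation7", "strategy1", 0.40)  # scores are made up
memory.observe("situation7", "strategy3", 0.55)
memory.observe("situation7", "strategy5", 0.70)
print(memory.recall("situation7"))  # strategy5
```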

2. Time estimation events and possibility and probability

FIG. 61 depicts a sandwich pathway and shows the linear steps to make a sandwich. Most of the time, steps are represented by sound sentences. Other times they are represented by visual images or movie sequences. For each step, the sandwich pathway contains an estimate of when the step begins and ends. Thus, pathways store the estimation of events.
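As a rough sketch of a pathway whose steps carry time estimates, the steps can be modeled as records with an estimated start and end. The step descriptions and the times in seconds are invented for illustration; FIG. 61 is not reproduced here, and `Step`, `estimated_duration`, and `step_at` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Step:
    description: str
    est_start: float  # seconds from the start of the task (made-up values)
    est_end: float


sandwich_pathway = [
    Step("get two slices of bread", 0, 20),
    Step("add the filling", 20, 80),
    Step("close and cut the sandwich", 80, 100),
]


def estimated_duration(pathway):
    """Estimated time from the first step's start to the last step's end."""
    return pathway[-1].est_end - pathway[0].est_start


def step_at(pathway, t):
    """Return the step the robot expects to be doing at time t, if any."""
    for step in pathway:
        if step.est_start <= t < step.est_end:
            return step.description
    return None


print(estimated_duration(sandwich_pathway))  # 100
print(step_at(sandwich_pathway, 30))         # add the filling
```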

FIG. 53A depicts a sandwich pathway that shows different possibilities. Making a lobster sandwich is different from making a meatball sandwich. They share some steps, but some steps are different. The sandwich pathways store possibilities (if they are repeated experiences). And each possibility has a weight of the probability of occurring in the future. The more experiences the robot has with an event the more points added to the connection weights.
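One way to picture connection weights that grow with experience is a counter per possibility. Turning the weights into probabilities by dividing by the total is an assumption of this sketch, as is the name `PossibilityNode`; the text only says that more experiences add more points.

```python
from collections import Counter


class PossibilityNode:
    """A branch point in a pathway with one weight per possibility."""

    def __init__(self):
        self.weights = Counter()

    def experience(self, possibility, points=1):
        # Each repeated experience adds points to the connection weight.
        self.weights[possibility] += points

    def probability(self, possibility):
        # Assumed normalization: weight divided by the sum of all weights.
        total = sum(self.weights.values())
        return self.weights[possibility] / total if total else 0.0


node = PossibilityNode()
for _ in range(3):
    node.experience("lobster sandwich")
node.experience("meatball sandwich")
print(node.probability("lobster sandwich"))  # 0.75
```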

Referring to FIG. 67, let's tie this in with the self-creating computer program stored in pathways. In this case we use driving as an example. As the robot learns how to drive, he is constantly doing a task: "drive between the 2 white lines and watch out for dangerous objects". This instruction is stored in the driving pathways and the robot is constantly reminded of it (a permanent instruction) because he is repeatedly following this rule.

Referring to FIG. 89, over hundreds of hours of driving, the robot has applied the rules for traffic lights about every 2 minutes. So, the robot's brain created an if-then statement from observing similar driving pathways. When the robot is driving, these operations are created:

if id traffic light follow rule4. rule4: green light is go, red light is stop, and yellow light is null.

sub-levels:

if id traffic light and car is going straight, do this ...

if id traffic light and car is turning, do that ...

if id traffic light and in the middle of a desert, do this ...

Thus, the driving pathways are observed by the robot's brain and patterns are found. Most likely complicated if-then statements are stored next to pathways because in driving school and books, they teach students using if-then statements.

The above example shows pathways store complex computer functions along with possibilities and probabilities of events. The computer functions store things like if-then statements, conditional loops, procedures, recursive functions, hierarchical functions, and event probability and possibility.
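The traffic-light rule and its sub-levels could be written as a lookup plus a context-specific refinement. This is a minimal sketch: `on_traffic_light` and the context keys are hypothetical names, and the sub-level actions are left as the same placeholders the text uses, since the text does not spell them out.

```python
# rule4 from the text: green is go, red is stop, yellow is null (None here).
RULE4 = {"green": "go", "red": "stop", "yellow": None}

# Sub-level rules keyed by driving context. The actions are placeholders
# because the text elides them ("do this ...", "do that ...").
SUB_LEVEL_RULES = {
    "going_straight": "do this ...",
    "turning": "do that ...",
    "middle_of_desert": "do this ...",
}


def on_traffic_light(color, context=None):
    """If a traffic light is identified, apply rule4 and any sub-level rule."""
    base_action = RULE4[color]
    sub_action = SUB_LEVEL_RULES.get(context)
    return base_action, sub_action


print(on_traffic_light("red", "turning"))  # ('stop', 'do that ...')
```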

After many driving experiences, the robot will have a computer program stored in the driving pathways. Most likely the robot will have a linear procedure running as an operating system and activate if-then statements to take action.

FIG. 69 depicts a general procedure to drive. At the beginning of the procedure the robot has to check the car for safety, such as adjusting the rear view mirror and checking the gas meter. Next, the robot has to identify the destination and plot the fastest route to it. For a long trip, the robot may plot the route in segments. After the robot gets to its destination he has to do 2 other tasks: find parking and check the car before shutting down. Examples of checking the car include making sure the shift gear is set to neutral and making sure the doors are locked before getting out of the car.
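The idea of a linear procedure that runs continuously while if-then statements fire on events can be sketched as a loop over steps. The function `run`, the event table, and the wording of the steps are assumptions for illustration; they paraphrase the procedure described above rather than reproduce FIG. 69.

```python
PROCEDURE = [
    "check the car (mirror, gas meter)",
    "identify destination and plot route",
    "drive to destination",
    "find parking",
    "check the car before shutting down",
]


def run(procedure, events, rules):
    """Walk the linear procedure; events seen at a step fire matching rules.

    `events` maps a step index to the things identified during that step,
    and `rules` maps an identified thing to the rule to follow.
    """
    log = []
    for index, step in enumerate(procedure):
        log.append(("step", step))
        for event in events.get(index, []):
            if event in rules:
                log.append(("rule", rules[event]))
    return log


log = run(
    PROCEDURE,
    events={2: ["traffic light"]},  # a traffic light is seen while driving
    rules={"traffic light": "follow rule4"},
)
for entry in log:
    print(entry)
```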

So this is the general procedure the robot follows while driving the car. At the same time the robot will use if-then statements and facts to drive safely. Most likely, he will execute if-then operations to follow rules. For example, if the robot ids a traffic light and his intention is to turn left, these rules will activate in his mind:

task: if id traffic light follow rule4. rule4: green light is go, red light is stop, and yellow light is null.

sub-task:

if id traffic light and car is turning, do this ...

Conflicts between rules are solved using logical inferencing on the driving rules container. This type of inferencing is taught by teachers in school when dealing with complex if-then statements (learned previously). For example, if the robot is driving, ids a traffic light, and intends to make a left turn, something unexpected might happen, like police sirens coming from behind the car. In the rules container is a rule that states the driver has to stop all activities and let the police car or cars pass. Therefore, the robot aborts all future tasks and does a brand new task, which is to pull over to the side of the road and let the police car pass.

However, most driving rules are simple. The robot will follow the procedures of driving and at the same time execute if-then operations to follow rules. If the robot is in situation4, do task3. If the robot is in situation8, do these tasks. The more complex rules are accomplished using logical inferencing. Below are examples of logical inferencing for driving a car:

if i'm in situation1 and situation4, then i have to do task9.

if i'm in situation3 and situation7 and situation4, but situation7 has top priority, then i have to do task1 and task3.
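A simple way to sketch this kind of inferencing is to give each rule a set of conditions and a priority, then follow the highest-priority rule whose conditions all hold. The `Rule` class, the `resolve` function, and the numeric priorities are hypothetical; the police siren case is taken from the example in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    conditions: frozenset  # situations that must all be active
    tasks: tuple           # tasks to carry out when the rule wins
    priority: int = 0      # higher number wins (made-up scale)


def resolve(rules, active):
    """Return the tasks of the highest-priority rule that matches."""
    matching = [r for r in rules if r.conditions <= active]
    if not matching:
        return ()
    return max(matching, key=lambda r: r.priority).tasks


RULES = [
    Rule(frozenset({"traffic light", "turning left"}), ("follow rule4",), 1),
    Rule(frozenset({"police siren"}), ("pull over", "let police car pass"), 10),
]

print(resolve(RULES, {"traffic light", "turning left"}))
# ('follow rule4',)
print(resolve(RULES, {"traffic light", "turning left", "police siren"}))
# ('pull over', 'let police car pass')
```

When the siren is heard, the higher-priority rule replaces the turning task, which mirrors the robot aborting its planned tasks to pull over.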

Doing a task like driving is very complex. The robot will be using the learning pathway and the practice pathway to learn to drive. After several weeks of driving, the robot will have optimal driving pathways to drive a car in its brain. Inside the driving pathways is a self-created computer program, equipped with a self-created database of facts, procedures and rules on driving.

The knowledge to drive a car using human intelligence.

A skill like driving a car is thousands of times more complex than playing chess. There are more rules, more goals, and safety rules to follow. How does a human learn to drive? We learn to drive from both driving teachers and books.

For the robot, he will use the learning a skill pathway to learn the goals and rules of driving. The robot will use logic and common sense to id the most important information in books or lectures. The sentences from driving books self-organize in a self-creating semantic network (FIG. 45A). Information will be stored optimally and visually. The goals are stored here and the rules are stored there.

Since the rules of driving are plentiful, the rules container holds even more organized structures to store operational functions, recursive rules, hierarchical rules, methods for solving conflicting if-then statements, and nested if-then statements.

The learning pathways store optimal search functions to look for data quickly in this driving semantic network. These search functions are also self-created.

Next, the practice pathway is used to create and store linear instructions in driving pathways (FIG. 45B). As usual, the robot's mind will use common sense knowledge to create and store instructions in pathways. After months of driving and trial and error, the robot creates optimal driving pathways to drive a car, perfectly.

The learning pathways and the practice pathways create the pathways to drive a car in the robot's brain. Once the data is there, the robot can use another decision making pathway to "use" that knowledge (driving task). FIG. 45C is a diagram depicting a decision making pathway20 that uses 2 different tasks (driving a car and talking on the phone). This decision making pathway20 is doing 2 tasks at the same time.
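As a loose illustration of one pathway managing two tasks, the steps of each task can be interleaved so that neither is abandoned. Treating "simultaneous" as round-robin interleaving is an assumption of this sketch, and the step names and the `interleave` function are invented.

```python
from itertools import zip_longest


def interleave(*tasks):
    """Alternate between the steps of several tasks until all are done."""
    for group in zip_longest(*tasks):
        for step in group:
            if step is not None:
                yield step


driving = ["watch road", "steer", "brake"]
phone_call = ["listen", "reply"]

schedule = list(interleave(driving, phone_call))
print(schedule)
# ['watch road', 'listen', 'steer', 'reply', 'brake']
```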

As the reader can see, I'm not using any AI methods from autonomous cars. My robot learns information to drive from reading books or watching lectures. Humans don't have a navigation system, or machine learning, or GPS in their brains. They drive a car based on human intelligence and not by data structures from autonomous cars.

Borrowing knowledge from different skills

Let's say that the robot has mastered the skill of driving a car. Now the robot wants to drive a motorcycle. The robot knows that the majority of rules and goals of driving a car are similar to the rules and goals of driving a motorcycle.

While using the learning pathway to learn the new rules of driving a motorcycle, the robot will borrow driving knowledge from the car pathways. New rules and procedures will be stored in the motorcycle pathways. Using the practice pathways, the robot will cement the linear instructions in the motorcycle pathways and fuse the new and old rules and procedures.
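Borrowing knowledge from an existing skill resembles a layered lookup: new rules are stored with the new skill, and anything not found there falls back to the older skill. The sketch below uses Python's `collections.ChainMap` for that fallback. The rule names and the helmet rule are hypothetical examples, not taken from the text.

```python
from collections import ChainMap

# Rules already stored in the car pathways (examples from the text).
car_rules = {
    "traffic light": "follow rule4",
    "lane": "drive between the 2 white lines",
}

# New rules learned for the motorcycle (hypothetical example).
motorcycle_rules = {"helmet": "wear a helmet"}

# Lookups try the motorcycle rules first, then fall back to the car rules.
motorcycle_pathway = ChainMap(motorcycle_rules, car_rules)

print(motorcycle_pathway["helmet"])         # wear a helmet
print(motorcycle_pathway["traffic light"])  # follow rule4
```

The car rules are not copied or changed, so the car skill stays intact while the motorcycle skill reuses it.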

After months of practicing to drive a motorcycle the robot's brain will have the optimal knowledge to drive on the road. FIG. 45D shows a decision making pathway to "use" the motorcycle pathways to drive on the road. In this case, the robot is driving a motorcycle and talking on the phone at the same time (which is dangerous).

The robot using a skill to do different media

Let's say the robot mastered the skill of playing basketball and someone wanted the robot to play basketball in a video game. Another type of decision making pathway will be used to take pre-existing knowledge and adapt it to a skill like playing video games. Playing video games has its goals and rules and basketball has its goals and rules. The decision making pathway will fuse the two skills together.

If you think about it, a basketball game played on a video game is different from a real basketball game. For video games, all 5 players on the robot's team are controlled by the player (the robot). This is completely different from real life basketball where the robot is an individual. Thus, the robot has to tweak the rules of basketball in order to adapt to video game rules.

Knowledge from teachers in school will create the decision making pathways. These decision making pathways will help the robot adapt 2 or more skills together.

--- deleted page from above.

Example of robot learning a new skill, playing chess, by itself without guidance from teachers.

The sentences below are the linear thoughts of the robot when it's trying to learn and play a new game, chess:

robot's thoughts:

goal: "learn to play chess".

recall fact: "in order to learn a new game i have to id 3 things: goals, rules, and procedures."

asking itself a question: "what are the rules, goals, and procedures of chess?"

seeking and providing itself with the answers: "the goal of chess is to take turns playing the game until someone's king is eliminated. while playing the game you have to follow all the rules. here are the rules. remember them and follow each one."

-----------

Language encapsulates entire pathways and allows the robot to learn different skills in a bootstrapping manner. FIG. 16A shows a diagram depicting how the robot learns to write a book. English sentences are used to encapsulate entire sets of instructions, called pathways.

In FIG. 16A, first, the robot learns to write words and understand basic grammar rules (V1-V2). Next, he takes those skills to write a sentence (V3). Then he takes the knowledge of writing a sentence to write a paragraph (V4). Finally, the robot takes previous knowledge to write a book (V5). Notice in FIG. 16B, said robot is using previously learned skills to write a book. For example, he is repeatedly using basic grammar rules, writing sentences, and writing paragraphs. As you might recall, writing a paragraph requires writing several sentences and using basic grammar rules.
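The bootstrapping order described above can be pictured as a table in which each sentence naming a skill points to the simpler skills it is built from. This is a minimal sketch; the `SKILLS` table and the `expand` function are hypothetical, and the exact layout of FIG. 16A and FIG. 16B is not reproduced.

```python
# Each sentence (a skill name) encapsulates the sub-skills it relies on.
SKILLS = {
    "write a book": ["write a paragraph"],
    "write a paragraph": ["write a sentence"],
    "write a sentence": ["write words", "use basic grammar rules"],
    "write words": [],
    "use basic grammar rules": [],
}


def expand(skill, skills=SKILLS):
    """Return the skill followed by every skill it depends on, depth-first."""
    result = [skill]
    for sub_skill in skills[skill]:
        result.extend(expand(sub_skill, skills))
    return result


print(expand("write a book"))
# ['write a book', 'write a paragraph', 'write a sentence',
#  'write words', 'use basic grammar rules']
```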

English sentences represent a task or sub-task. A sentence is a fixed object that can represent a fuzzy or abstract concept. There are many cats in this world, coming in different sizes and shapes, but the word cat is a fixed object that represents a broad range of the species. In this case, sentences are used to represent or encapsulate very complex tasks. This type of encapsulation allows complex intelligence to form in the human brain, enabling us to solve college level problems.

FIG. 11 depicts several examples of words and sentences representing abstract objects or actions. When the robot senses the target object, the meaning automatically activates in his mind.

When the robot is making a decision and the sentence "write a book in your spare time" activates in his mind, the sentence encapsulates all the complex instructions to write a book. That one sentence represents all the knowledge the robot needs to write a book. This sentence is also known as an internal instruction, given by the robot himself to take action.
